The following section describes how to install an Apache Airflow extra that's hosted on a private URL with authentication.

Option three: Python dependencies hosted on a private PyPI/PEP 503-compliant repository. This method allows you to use the same libraries offline, with --find-links /usr/local/airflow/plugins and --no-index, without adding --constraint.

As an example, consider a DAG representing the following workflow: task A gets data from a data source and prepares it for analysis, task B analyzes the prepared data and yields a visualized report, and task C sends the visualized report by email to the administrator. This gives a linear DAG: A → B → C.
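In Airflow itself, a chain like this is declared with the bitshift operator (task_a >> task_b >> task_c). The dependency-free sketch below only illustrates what "linear DAG" means: each task lists its upstream tasks, and a topological sort yields the order in which they can run. It uses Python's standard graphlib module rather than Airflow, and the names A, B and C simply stand in for the three tasks.

```python
from graphlib import TopologicalSorter

# Upstream dependencies for the linear DAG A -> B -> C:
# A has none, B runs after A, and C runs after B.
DEPENDENCIES = {"A": set(), "B": {"A"}, "C": {"B"}}


def execution_order(dependencies):
    """Return the tasks in an order that respects every dependency.

    Raises graphlib.CycleError if the graph has a cycle, i.e. is not a DAG.
    """
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    print(execution_order(DEPENDENCIES))  # ['A', 'B', 'C']
```

Because A → B → C is a chain, only one order is valid. Adding an edge from C back to A would make the graph cyclic, and static_order() would raise graphlib.CycleError instead of returning an order.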

AIRFLOW 2.0 DAG EXAMPLE UPDATE

Then, update your requirements.txt so that its entries are preceded by the --find-links and --no-index options, without adding --constraint.
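As a minimal sketch, assuming the wheels are available under /usr/local/airflow/plugins as stated above, the helper below builds requirements.txt content that starts with the two options and drops any --constraint line. The function name offline_requirements and the package names in the example are hypothetical.

```python
from typing import Iterable

PLUGINS_DIR = "/usr/local/airflow/plugins"  # wheel location named in the text


def offline_requirements(packages: Iterable[str], plugins_dir: str = PLUGINS_DIR) -> str:
    """Build requirements.txt content that installs only from local wheels.

    --find-links points pip at the wheel directory, --no-index stops pip
    from contacting a package index, and no --constraint line is kept.
    """
    lines = [f"--find-links {plugins_dir}", "--no-index"]
    for pkg in packages:
        pkg = pkg.strip()
        # Skip blank lines and any existing --constraint / -c entries.
        if not pkg or pkg.startswith(("--constraint", "-c ")):
            continue
        lines.append(pkg)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(offline_requirements(["example-package==1.0"]), end="")
```

pip reads both options directly from a requirements file, so the resulting file can be used as-is.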

With DAG(dag_id="create_whl_file", schedule_interval=None, catchup=False, start_date=days_ago(1)) as dag:īash_command=f"mkdir /tmp/whls pip3 download -r /usr/local/airflow/requirements/requirements.txt -d /tmp/whls zip -j /tmp/plugins.zip /tmp/whls/* aws s3 cp /tmp/plugins.zip s3:// "Īfter running the DAG, use this new file as your Amazon MWAA plugins.zip, optionally, packaged with other plugins. Uses the dagster graphql api to run and monitor dagster jobs on remote dagster infrastructure Parameters :ĭagster_conn_id ( Optional ) – the id of the dagster connection, airflow 2.From _operator import BashOperator However, with Airflow 2.0 on the horizon, there is promise of an. User_token ( Optional ) – the dagster cloud user token to useĬlass dagster_airflow. Though I'm ingesting DAG run history directly from the Airflow. Organization_id ( Optional ) – the id of the dagster cloud organizationĭeployment_name ( Optional ) – the name of the dagster cloud deployment Run_config ( Optional ]) – the run config to use for the job runĭagster_conn_id ( Optional ) – the id of the dagster connection, airflow 2.0+ only Job_name ( str) – the name of the job to run Repostitory_location_name ( str) – the name of the repostitory location to use Repository_name ( str) – the name of the repository to use Uses the dagster cloud graphql api to run and monitor dagster jobs on dagster cloud Parameters : DagsterCloudOperator ( * args, ** kwargs ) ¶ ResourceDefinition Orchestrate Dagster from Airflow ¶ class dagster_airflow. The persistent Airflow DB resource Return type : Uri – SQLAlchemy URI of the Airflow DB to be usedĭag_run_config ( Optional ) – dag_run configuration to be used when creating a DagRun make_dagster_definitions_from_airflow_dag_bag ( dag_bag, connections = None, resource_defs = ) Parameters : (ie find files that contain both b’DAG’ and b’airflow’) (default: True)Ĭonnections ( List ) – List of Airflow Connections to be created in the Airflow DBĭefinitions dagster_airflow. 
Safe_mode ( bool) – True to use Airflow’s default heuristic to find files that contain DAGs

AIRFLOW 2.0 DAG EXAMPLE CODE

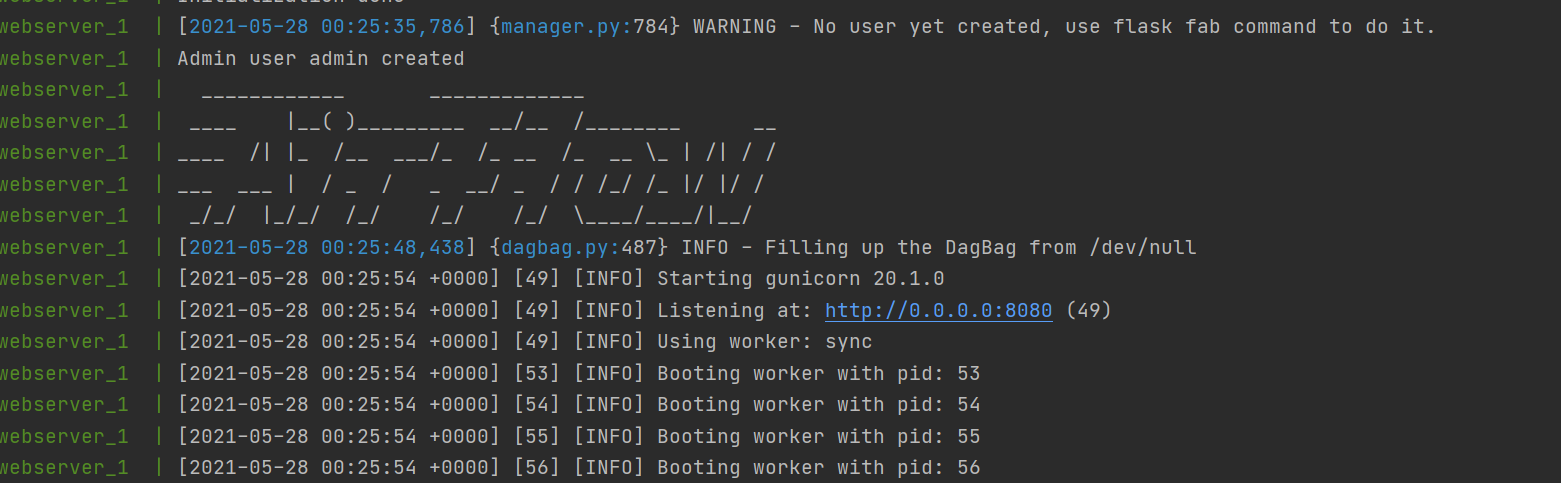

Copy paste the code in that file and execute the command docker-compose up -d in the folder docker-airflow. Create a file branching.py in the folder airflow-data/dags. Airflow 2.0, not 1.10.14 Clone the repo, go into it. Include_examples ( bool) – True to include Airflow’s example DAGs. Then, go to my beautiful repository to get the docker compose file that will help you running Airflow on your computer. Use RepositoryDefinition as usual, for example:ĭagit -f path/to/make_dagster_repo.py -n make_repo_from_dir Parameters :ĭag_path ( str) – Path to directory or file that contains Airflow Dags

From dagster_airflow import make_dagster_definitions_from_airflow_dags_path def make_definitions_from_dir (): return make_dagster_definitions_from_airflow_dags_path ( '/path/to/dags/', )
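The safe_mode heuristic can be sketched in plain Python. This is a minimal illustration that assumes only what the parameter description states (a file is a DAG candidate when its bytes contain both b'DAG' and b'airflow'); it is not Airflow's or dagster_airflow's actual discovery code, and Airflow's real file discovery also handles cases such as zipped DAGs and .airflowignore files. The function names looks_like_dag_file and find_dag_files are hypothetical.

```python
from pathlib import Path
from typing import List


def looks_like_dag_file(path: Path) -> bool:
    """Cheap byte scan: True if the file contains both b"DAG" and b"airflow".

    The file is never imported, so this can report false positives.
    """
    content = path.read_bytes()
    return b"DAG" in content and b"airflow" in content


def find_dag_files(dag_path: str, safe_mode: bool = True) -> List[Path]:
    """Collect candidate DAG files from a directory (recursively) or a single file.

    With safe_mode=True only files passing looks_like_dag_file are kept;
    with safe_mode=False every candidate is returned unfiltered.
    """
    root = Path(dag_path)
    candidates = [root] if root.is_file() else sorted(root.rglob("*.py"))
    if not safe_mode:
        return candidates
    return [p for p in candidates if looks_like_dag_file(p)]
```

A file that merely mentions both words in a comment still passes; the heuristic is only a cheap filter applied before the files are actually parsed.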
